Björn Browatzki

Computer Vision Researcher

Machine/Deep Learning Engineer

Hi there, I’m Björn (비연). I’m a computer scientist and engineer. I create software to perceive and understand the world around us.

During my PhD at the MPI in Tübingen, I developed multimodal object perception skills for robots in collaboration with the IIT in Genoa and Fraunhofer IPA. Later, I joined the interactive video startup Wirewax in London, where I had the pleasure of working on many fun computer vision problems, including Face Identification, Nudity Detection 👀 and Object Tracking.

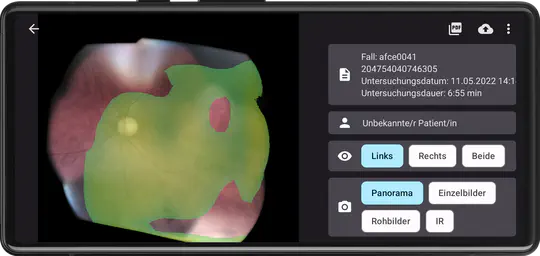

In 2018, I moved to Seoul for a position at Korea University. My work mostly revolved around decoding, encoding, recoding, and analysing large amounts of facial data. Around that time, I also started analysing retinal images. This project eventually turned into eye2you GmbH, a medtech startup that set out to create a smart and portable fundus camera for a wide range of medical applications.

I have a particular interest in few-shot settings and semi-supervised approaches using unsupervised generative methods.

Finally, I’m also a running enthusiast (=addict). Whenever I can, I’ll be somewhere on a track or on a trail.

🔹 Spaces over tabs 🔹 VI over Emacs 🔹 C++ over Java 🔹 Light mode over dark mode

- Faces, Eyes, Bodies

- Medical AI

- Robotic Vision

PhD (Dr.-Ing.) in Computer Vision, 2014

Max Planck Institute for Biological Cybernetics

MSc (Dipl.-Inf.) in Software Engineering, 2009

University of Stuttgart

Projects

Publications

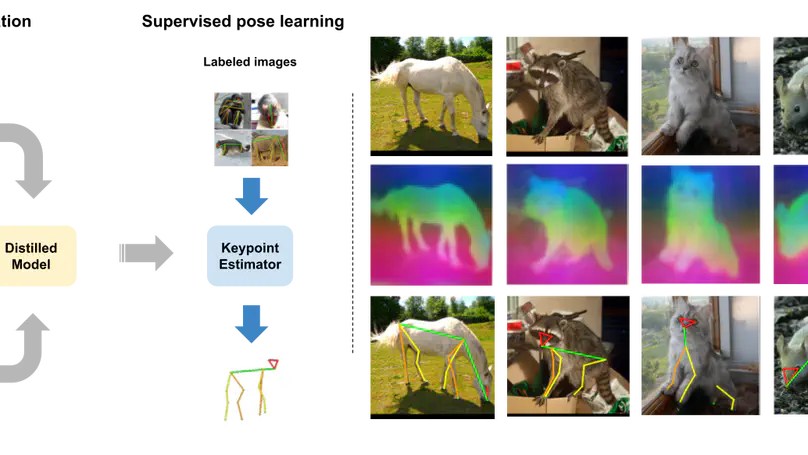

Animal pose estimation is an important ingredient of many real-world applications in behavior monitoring, agriculture, and wildlife conservation. At the same time, it is a highly challenging subject, as annotated datasets are scarce and noisy, and variability both within and across animal species in pose and appearance is significant. Here, we propose a novel framework addressing these challenges via distillation of self-supervised vision transformer (DINOv2) features. These features are extracted in a first stage on unlabeled data to produce an image-based attention map. An image encoder-decoder network is next used to predict these attention maps through distillation via supervised training. During this stage, augmentation is employed to enhance robustness across changes in viewing conditions. To learn keypoint locations, we remove the decoder part of the distilled network and train a new heatmap decoder that uses the same inputs to predict location heatmaps. The final keypoint prediction is done via the distilled image encoder and the heatmap decoder. Using this targeted distillation approach, we achieve new state-of-the-art results on the AP-10K and TigDog datasets, especially in zero-shot and few-shot settings, but also for fully supervised training.

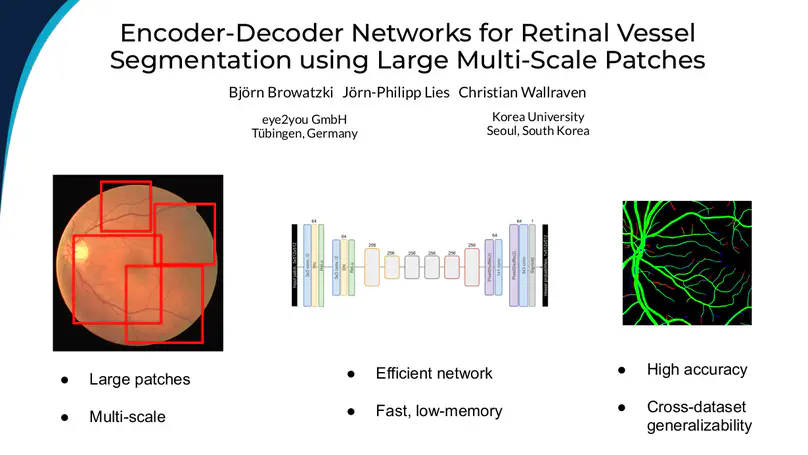

We propose an encoder-decoder framework for the segmentation of blood vessels in retinal images that relies on the extraction of large-scale patches at multiple image scales during training. Experiments on three fundus image datasets demonstrate that this approach achieves state-of-the-art results and can be implemented using a simple and efficient fully-convolutional network with a parameter count of less than 0.8M. Furthermore, we show that this framework, called VLight, avoids overfitting to specific training images and generalizes well across different datasets, which makes it highly suitable for real-world applications where robustness, accuracy, and low inference time on high-resolution fundus images are required.
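To give a rough idea of what sampling patches at multiple image scales can look like, here is a minimal sketch. It is not the VLight implementation: the function name `sample_multiscale_patches`, the scale values, and the clamping rule for oversized patches are all assumptions made for illustration. It only computes patch coordinates, so that a fixed-size patch taken from a downscaled image maps back to a proportionally larger region of the original.

```python
import random


def sample_multiscale_patches(height, width, patch_size, scales, rng):
    """Return one random patch box per scale (hypothetical helper).

    Each box is (scale, top, left, size) in original-image coordinates.
    A patch of `patch_size` pixels cut from an image resized by `scale`
    covers patch_size / scale pixels of the original image.
    """
    boxes = []
    for scale in scales:
        size = round(patch_size / scale)
        # Assumption: clamp patches that would exceed the image to its shorter side.
        size = min(size, height, width)
        top = rng.randint(0, height - size)    # randint is inclusive on both ends
        left = rng.randint(0, width - size)
        boxes.append((scale, top, left, size))
    return boxes


rng = random.Random(0)  # fixed seed so the sampling is repeatable
for box in sample_multiscale_patches(584, 565, 256, [1.0, 0.5, 0.25], rng):
    print(box)
```

Smaller scales yield boxes that cover more of the original image, so a network trained on them sees both fine vessel detail and wider context at the same input resolution.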

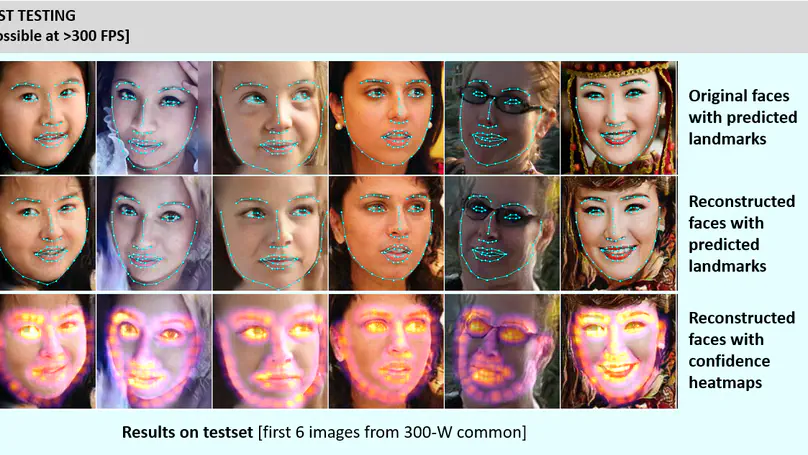

Current supervised methods for facial landmark detection require a large amount of training data and may suffer from overfitting to specific datasets due to the massive number of parameters. […]

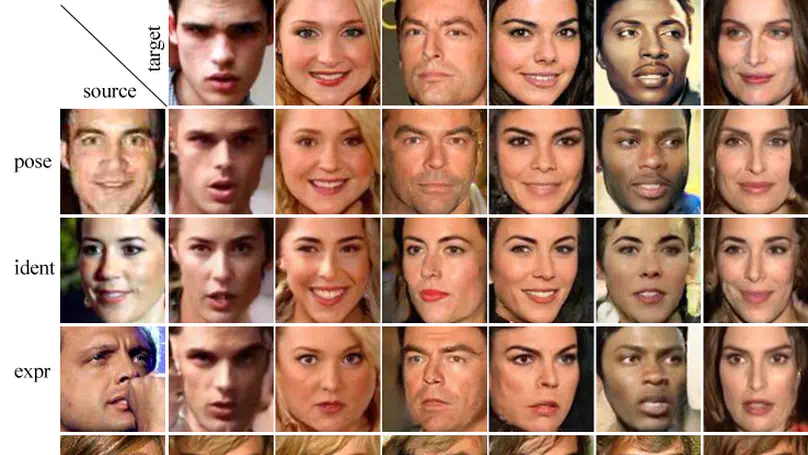

Current solutions to discriminative and generative tasks in computer vision exist separately and often lack interpretability and explainability. Using faces as our application domain, here we present an architecture that is based around two core ideas that address these issues: first, our framework learns an unsupervised, low-dimensional embedding of faces using an adversarial autoencoder that is able to synthesize high-quality face images. Second, a supervised disentanglement splits the low-dimensional embedding vector into four sub-vectors, each of which contains separated information about one of four major face attributes (pose, identity, expression, and style) that can be used both for discriminative tasks and for manipulating all four attributes in an explicit manner. The resulting architecture achieves state-of-the-art image quality, good discrimination and face retrieval results on each of the four attributes, and supports various face editing tasks using a face representation of only 99 dimensions. Finally, we apply the architecture’s robust image synthesis capabilities to visually debug label-quality issues in an existing face dataset.

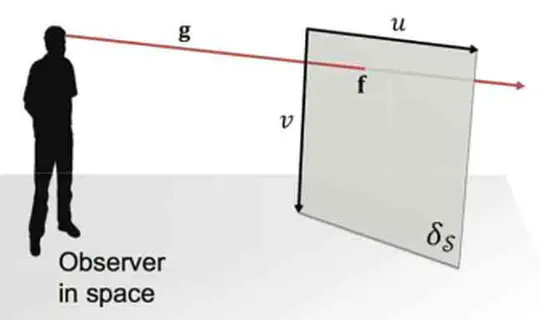

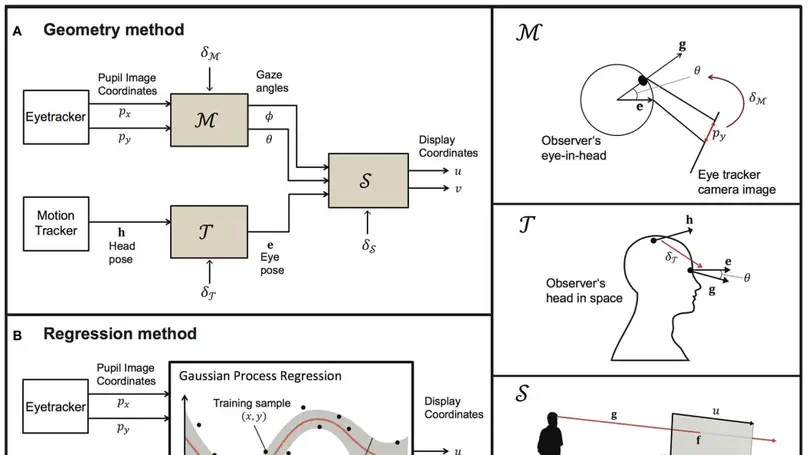

Video-based gaze-tracking systems are typically restricted in terms of their effective tracking space. This constraint limits the use of eyetrackers in studying mobile human behavior. Here, we compare two possible approaches for estimating the gaze of participants who are free to walk in a large space whilst looking at different regions of a large display. Geometrically, we linearly combined eye-in-head rotations and head-in-world coordinates to derive a gaze vector and its intersection with a planar display, by relying on the use of a head-mounted eyetracker and body-motion tracker. Alternatively, we employed Gaussian process regression to estimate the gaze intersection directly from the input data itself. Our evaluation of both methods indicates that a regression approach can deliver comparable results to a geometric approach. The regression approach is favored, given that it has the potential for further optimization, provides confidence bounds for its gaze estimates and offers greater flexibility in its implementation. Open-source software for the methods reported here is also provided for user implementation.
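The geometric approach can be illustrated with a small sketch: rotate the eye-in-head gaze direction into world coordinates using the head orientation, then intersect the resulting ray with the display plane. This is not the published software. The function names, the coordinate convention (y up, display facing the viewer), and the yaw-only head rotation are assumptions chosen to keep the example short.

```python
import math


def mat_vec(m, v):
    """Multiply a 3x3 matrix (list of rows) by a 3-vector."""
    return [sum(m[i][j] * v[j] for j in range(3)) for i in range(3)]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def yaw_matrix(angle_rad):
    """Rotation about the vertical (y) axis; assumed head-rotation model."""
    c, s = math.cos(angle_rad), math.sin(angle_rad)
    return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]


def gaze_on_display(head_pos, head_rot, eye_dir_in_head, plane_point, plane_normal):
    """Intersect the world-space gaze ray with a planar display.

    Returns the 3D intersection point, or None if the gaze is parallel
    to the display or points away from it.
    """
    gaze_dir = mat_vec(head_rot, eye_dir_in_head)  # eye-in-head -> world
    denom = dot(plane_normal, gaze_dir)
    if abs(denom) < 1e-9:
        return None  # ray is parallel to the plane
    offset = [q - p for q, p in zip(plane_point, head_pos)]
    t = dot(plane_normal, offset) / denom
    if t < 0:
        return None  # display is behind the viewer
    return [p + t * d for p, d in zip(head_pos, gaze_dir)]


# Viewer at eye height 1.7 m, display plane at z = 2 m facing the viewer.
hit = gaze_on_display(
    head_pos=[0.0, 1.7, 0.0],
    head_rot=yaw_matrix(math.radians(45)),
    eye_dir_in_head=[0.0, 0.0, 1.0],
    plane_point=[0.0, 0.0, 2.0],
    plane_normal=[0.0, 0.0, -1.0],
)
print(hit)
```

A regression approach would replace this explicit geometry with a learned mapping from the tracker measurements to the on-screen position.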

Contact Me

- Tübingen, Germany